BTC2010 Basic stats

Another year, another billion triple dataset. This time it was released at the same time my daughter was born, so I was a bit late running the stats script.

This year we’ve got a few more triples, perhaps making up for the fact that it wasn’t actually one billion last year :) We’ve now got 3.17B triples (3,171,793,030, if you want to be exact).

I’ve not had a chance to do anything really fun with this, so I’ll just dump the stats:

Subjects

- 159,185,186 unique subjects

- 147,663,612 occur in more than a single triple

- 12,647,098 more than 10 times

- 5,394,733 more than 100

- 313,493 more than 1,000

- 46,116 more than 10,000

- and 53 more than 100,000 times

That gives an average of 19.9252 triples per unique subject. As last year, I am not sure that having more than 100,000 triples with the same subject is really useful to anyone.
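The lists count the unique terms and then, cumulatively, how many of them occur in more than 1, 10, 100, ... triples. The original stats script is not shown here; below is a minimal Python sketch of the same bookkeeping, assuming the input is N-Quads with one statement per line. It keeps one counter entry per subject in memory, which would be far too much for all 159M subjects at once, and it does not distinguish bnode labels from different files, so treat it as an illustration of the counting logic rather than something to run over the full dump. The example.org IRIs are made up.

```python
from collections import Counter
from typing import Dict, Iterable

# Cumulative thresholds matching the lists: "more than 1, 10, 100, ... times".
THRESHOLDS = (1, 10, 100, 1_000, 10_000, 100_000)


def subject_counts(lines: Iterable[str]) -> Counter:
    """Count how many N-Quads statements each subject appears in.

    The subject is the first whitespace-separated token: either an IRI in
    <...> or a blank node label such as _:b0, and neither contains spaces.
    """
    counts: Counter = Counter()
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        counts[line.split(None, 1)[0]] += 1
    return counts


def bucket_stats(counts: Counter) -> Dict[str, int]:
    """Number of unique terms, plus how many occur more than each threshold."""
    stats = {"unique": len(counts)}
    for t in THRESHOLDS:
        stats[f"more than {t}"] = sum(1 for c in counts.values() if c > t)
    return stats


quads = [
    '<http://example.org/a> <http://example.org/p> "x" <http://example.org/g> .',
    '<http://example.org/a> <http://example.org/q> _:b1 <http://example.org/g> .',
    '_:b1 <http://example.org/p> "y y" <http://example.org/g> .',
]
stats = bucket_stats(subject_counts(quads))
print(stats)
```

Here `<http://example.org/a>` appears in two statements and `_:b1` in one, so there are 2 unique subjects and 1 of them occurs in more than a single triple.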

Looking only at bnodes used as subjects we get:

- 100,431,757 unique subjects

- 98,744,109 occur in more than a single triple

- 1,465,399 more than 10 times

- 266,759 more than 100

- 4,956 more than 1,000

- 48 more than 10,000

So 100M out of 159M subjects are bnodes, but they are used less often than the named resources.
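The claim that bnodes are used less often can be checked directly from the two lists. Since an RDF subject is either an IRI or a bnode, the named-subject figures are simply the overall figures minus the bnode ones. A quick sketch of that arithmetic, using only the numbers quoted above:

```python
# Counts copied from the two subject lists above.
all_subjects = {"unique": 159_185_186, "more than 10": 12_647_098}
bnode_subjects = {"unique": 100_431_757, "more than 10": 1_465_399}

# In RDF a subject is either an IRI or a bnode, so named = all - bnodes.
named_unique = all_subjects["unique"] - bnode_subjects["unique"]
named_over_10 = all_subjects["more than 10"] - bnode_subjects["more than 10"]

bnode_share = bnode_subjects["unique"] / all_subjects["unique"]
bnode_over_10 = bnode_subjects["more than 10"] / bnode_subjects["unique"]
named_over_10_frac = named_over_10 / named_unique

print(f"bnodes are {bnode_share:.1%} of unique subjects")
print(f"used in more than 10 triples: bnodes {bnode_over_10:.1%}, named {named_over_10_frac:.1%}")
```

This gives roughly 63% bnodes among unique subjects, but only about 1.5% of bnode subjects appear in more than 10 triples, against about 19% of named subjects.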

The top subjects are as follows:

| #triples | subject |
|---|---|
| 1,412,709 | http://www.proteinontology.info/po.owl#A |
| 895,776 | http://openean.kaufkauf.net/id/ |
| 827,295 | http://products.semweb.bestbuy.com/company.rdf#BusinessEntity_BestBuy |
| 492,756 | cycann:externalID |
| 481,000 | http://purl.uniprot.org/citations/15685292 |
| 445,430 | foaf:Document |
| 369,567 | cycann:label |
| 362,391 | dcmitype:Text |
| 357,309 | http://sw.opencyc.org/concept/ |
| 349,988 | http://purl.uniprot.org/citations/16973872 |

I do not know enough about the Protein Ontology to say why po:A is so popular. Cyc was already in this list last year, and I guess every product BestBuy exposes appears in a triple with the BestBuy URI as the subject.
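The tables in this post abbreviate IRIs with prefixes such as foaf:, gr: and cycann:. A sketch of producing such a top-10 table from per-term counts follows. The prefix map is an illustrative assumption covering only a few well-known namespaces (it is not the map used for these tables, and cycann: is deliberately left out), and `compact`, `top_k` and `as_table` are hypothetical helper names.

```python
from collections import Counter
from typing import List, Tuple

# Illustrative prefix map with a few well-known namespaces only; it is an
# assumption, not the map used to produce the tables in this post.
PREFIXES = {
    "http://www.w3.org/1999/02/22-rdf-syntax-ns#": "rdf:",
    "http://www.w3.org/2000/01/rdf-schema#": "rdfs:",
    "http://xmlns.com/foaf/0.1/": "foaf:",
    "http://purl.org/goodrelations/v1#": "gr:",
    "http://purl.org/dc/dcmitype/": "dcmitype:",
}


def compact(term: str) -> str:
    """Turn <IRI> into prefix:local when a known namespace matches, else the bare IRI."""
    iri = term[1:-1] if term.startswith("<") and term.endswith(">") else term
    for namespace, prefix in PREFIXES.items():
        if iri.startswith(namespace):
            return prefix + iri[len(namespace):]
    return iri


def top_k(counts: Counter, k: int = 10) -> List[Tuple[int, str]]:
    """(count, compacted term) pairs for the k most frequent terms."""
    return [(n, compact(term)) for term, n in counts.most_common(k)]


def as_table(rows: List[Tuple[int, str]], column: str = "subject") -> str:
    """Render rows in the same pipe-table layout as the tables in this post."""
    lines = [f"| #triples | {column} |", "|---|---|"]
    lines += [f"| {n:,} | {term} |" for n, term in rows]
    return "\n".join(lines)


counts = Counter({
    "<http://xmlns.com/foaf/0.1/Document>": 5,
    "<http://example.org/thing>": 2,
    "_:b7": 1,
})
print(as_table(top_k(counts, 2)))
```

`Counter.most_common` already does the partial sort, so no separate heap is needed; for the real dataset the counts themselves would have to come from an external sort or a disk-backed store rather than an in-memory `Counter`.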

Predicates

- 95,379 unique predicates

- 83,370 occur in more than a single triple

- 46,710 more than 10

- 18,385 more than 100

- 5,395 more than 1,000

- 1,271 more than 10,000

- 548 more than 100,000

On average, a predicate occurs in 33254.6 triples. The top 10 are:

| #triples | predicate |
|---|---|
| 557,268,190 | rdf:type |
| 384,891,996 | rdfs:isDefinedBy |
| 215,041,142 | gr:hasGlobalLocationNumber |
| 184,881,132 | rdfs:label |
| 175,141,343 | rdfs:comment |
| 168,719,459 | gr:hasEAN_UCC-13 |
| 131,029,818 | gr:hasManufacturer |
| 112,635,203 | rdfs:seeAlso |
| 71,742,821 | foaf:nick |
| 71,036,882 | foaf:knows |

The usual suspects: rdf:type, rdfs:comment, rdfs:label, rdfs:seeAlso and a bit of FOAF. New this year: lots of GoodRelations data!
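Because every triple has exactly one subject and exactly one predicate, the per-term counts for those positions sum to the total number of triples, so both averages should simply be the total divided by the number of unique terms. A quick check with the figures from this post (`average_per_term` is just an illustrative helper):

```python
TOTAL_TRIPLES = 3_171_793_030


def average_per_term(total_triples: int, unique_terms: int) -> float:
    """Mean occurrences per term for a position that every triple fills exactly once."""
    return total_triples / unique_terms


subject_avg = average_per_term(TOTAL_TRIPLES, 159_185_186)  # unique subjects
predicate_avg = average_per_term(TOTAL_TRIPLES, 95_379)     # unique predicates
print(f"{subject_avg:.4f} triples per subject, {predicate_avg:.1f} per predicate")
```

Both agree with the reported 19.9252 and 33254.6. The same shortcut does not apply to the resource-object average, because triples with literal objects are excluded from that count.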

Objects – Resources

Ignoring literals for the moment, looking only at resource-objects, we have:

- 192,855,067 unique resources

- 36,144,147 occur in more than a single triple

- 2,905,294 more than 10 times

- 197,052 more than 100

- 20,011 more than 1,000

- 2,752 more than 10,000

- and 370 more than 100,000 times

On average, each resource is the object of 7.72834 triples. These figures cover both named resources and bnodes; looking only at bnodes, we get:

- 97,617,548 unique resources

- 616,825 occur in more than a single triple

- 8,632 more than 10 times

- 2,167 more than 100

- 1 more than 1,000

Since bnode IDs are only valid within the file they appear in, there is a limit to how often they can appear, but bnodes still make up just over half of all unique resource objects.
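Telling bnode objects apart from named resources and literals only takes the first character of the object term. Below is a sketch, assuming well-formed N-Quads with one statement per line (`object_kind` is a hypothetical helper, not part of any library), followed by the bnode share computed from the two lists above, which works out to about 50.6%.

```python
def object_kind(line: str) -> str:
    """Classify the object of one N-Quads statement as 'iri', 'bnode' or 'literal'.

    Subjects and predicates never contain whitespace, so after splitting off
    the first two tokens the remainder starts with the object term.
    """
    parts = line.strip().split(None, 2)
    if len(parts) < 3:
        raise ValueError(f"not a statement: {line!r}")
    rest = parts[2]
    if rest.startswith('"'):
        return "literal"
    if rest.startswith("_:"):
        return "bnode"
    if rest.startswith("<"):
        return "iri"
    raise ValueError(f"unrecognised object term in: {line!r}")


# Share of bnodes among unique resource objects, from the two lists above.
bnode_object_share = 97_617_548 / 192_855_067
print(f"{bnode_object_share:.1%} of unique resource objects are bnodes")
```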

The top ten bnode IDs are pretty boring, but the top 10 named resources are:

| #triples | resource-object |
|---|---|
| 215,532,631 | gr:BusinessEntity |
| 215,153,113 | ean:businessentities/ |
| 168,205,900 | gr:ProductOrServiceModel |
| 167,789,556 | http://openean.kaufkauf.net/id/ |
| 71,051,459 | foaf:Person |
| 10,373,362 | foaf:OnlineAccount |
| 6,842,729 | rss:item |
| 6,025,094 | rdf:Statement |
| 4,647,293 | foaf:Document |
| 4,230,908 | http://purl.uniprot.org/core/Resource |

These are pretty much all types; compare them with the actual type counts:

Types

Defining a “type” as the object of any triple whose predicate is rdf:type gives us:

- 170,020 types

- 91,479 occur in more than a single triple

- 20,196 more than 10 times

- 4,325 more than 100

- 1,113 more than 1,000

- 258 more than 10,000

- and 89 more than 100,000 times

On average each type is used 3277.7 times, and the top 10 are:

| #triples | type |
|---|---|
| 215,536,042 | gr:BusinessEntity |
| 168,208,826 | gr:ProductOrServiceModel |
| 71,520,943 | foaf:Person |
| 10,447,941 | foaf:OnlineAccount |
| 6,886,401 | rss:item |
| 6,066,069 | rdf:Statement |
| 4,674,162 | foaf:Document |
| 4,260,056 | http://purl.uniprot.org/core/Resource |
| 4,001,282 | http://data-gov.tw.rpi.edu/2009/data-gov-twc.rdf#DataEntry |
| 3,405,101 | owl:Class |

Not identical to the top resources, but quite similar: lots of FOAF and, new this year, lots of GoodRelations.
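The type statistics are a filtered view of the object counts: only triples whose predicate is rdf:type contribute. Below is a sketch of that filter, again assuming one N-Quads statement per line (`type_counts` is a hypothetical helper), plus a check that ties the numbers together. Each rdf:type triple names exactly one type, so the 557,268,190 rdf:type triples from the predicate table, spread over 170,020 types, should give the average of 3277.7 reported above.

```python
from collections import Counter
from typing import Iterable

RDF_TYPE = "<http://www.w3.org/1999/02/22-rdf-syntax-ns#type>"


def type_counts(lines: Iterable[str]) -> Counter:
    """Count how often each term is used as the object of rdf:type."""
    counts: Counter = Counter()
    for line in lines:
        parts = line.strip().split(None, 2)
        if len(parts) < 3 or parts[1] != RDF_TYPE:
            continue
        obj = parts[2]
        if obj.startswith('"'):
            continue  # skip (unusual) literal types; they may contain spaces
        # IRIs and bnode labels contain no whitespace, so the type ends at the first space.
        counts[obj.split(None, 1)[0]] += 1
    return counts


quads = [
    f"<http://example.org/a> {RDF_TYPE} <http://xmlns.com/foaf/0.1/Person> <http://example.org/g> .",
    f"<http://example.org/b> {RDF_TYPE} <http://xmlns.com/foaf/0.1/Person> <http://example.org/g> .",
    '<http://example.org/a> <http://xmlns.com/foaf/0.1/name> "Ann Smith" <http://example.org/g> .',
]
counts = type_counts(quads)

# Every rdf:type triple contributes to exactly one type, so the average per
# type is the rdf:type predicate count divided by the number of types.
avg_per_type = 557_268_190 / 170_020
print(counts, f"{avg_per_type:.1f}")
```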

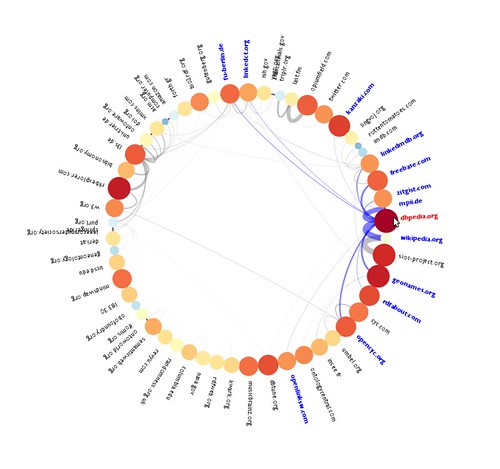

Contexts

Something changed in how contexts are handled for BTC2010: this year we have only 8M contexts, whereas last year we had over 35M.

Could all of DBpedia be in a single context this year?

- 8,126,834 unique contexts

- 8,048,574 occur in more than a single triple

- 6,211,398 more than 10 times

- 1,493,520 more than 100

- 321,466 more than 1,000

- 61,360 more than 10,000

- and 4,799 more than 100,000 times

That gives an average of 389.958 triples per context. The 10 biggest contexts are:

That concludes my boring stats dump for BTC2010, for now. Some information on literals, and hopefully some graphs, will follow soon! I also plan to look at how these stats have changed since last year. So far I see much more GoodRelations, but there must be other fun changes!