A student came see me recently, wanting to do a Diplomarbeit (i.e. a MSc++) on a learning technique called Hierarchical Temporal Memory or HTMs. He had very specific ideas about what he wanted, and has already worked with the algorithm in his Projektarbeit (BSc++). I knew nothing about the approach, but remembered this reddit post, which was less than enthusiastic. I spoke to the student and read up on the thing a bit, and it seem interesting enough. It’s claimed to be close the way the brain learns/recognizes patterns and to be a general model of intelligence and it will work for EVERYTHING. This reminded me of a few other things I’ve come across in the past years that claim to be the new Machine Learning algorithm with Capital A, i.e. the algorithm to end all other ML work, which will work on all problems, and so on. Here is a small collection of the three most interesting ones I remembered:

Hierarchical Temporal Models

HTMs are “invented” by Jeff Hawkin, whose track record includes making the Palm device and platform and later the Treo. Having your algorithm’s PR based on the celebrity status of the inventor is not really a good sign. The model is first presented in his book On Intelligence, which I’ve duly bought and am currently reading. The book is so far very interesting, although full of things like “[this is] how the brain actually works“, “Can we build intelligent machines? … Yes. We can and we will.”, etc. As far as I understand, the model from the book was formally analysed and became the HTM algorithm in Dileep George‘s thesis: How the brain might work: A hierarchical and temporal model for learning and recognition. He applies to recognizing 32×32 pictures of various letters and objects.

The model is based on a hierarchy of sensing components, each dealing with a higher level of abstraction when processing input, the top of the hierarchy feeds into some traditional learning algorithm, such as a SVM for classification, or some clustering mechanism. In effect, the whole HTM is a form of feature pre-processing. The temporal aspect is introduces by the nodes observing their input (either the raw input, or their sub-nodes) over time, this (hopefully) gives rise to translation, rotation and scale invariance, as the things you are watching move around. I say watching here, because computer-vision seems to be the main application, although it’s of course applicable to EVERYTHING:

HTM technology has the potential to solve many difficult problems in machine learning, inference, and prediction. Some of the application areas we are exploring with our customers include recognizing objects in images, recognizing behaviors in videos, identifying the gender of a speaker, predicting traffic patterns, doing optical character recognition on messy text, evaluating medical images, and predicting click through patterns on the web.

The guys went on to make a company called Numenta for spreading this technique, they have a (not open-source, boo!) development framework you can play with.

Normalised Compression Distance

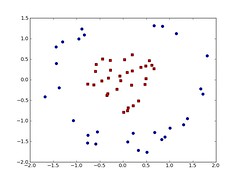

This beast goes under many names: compression based learning, compression-based dissimilarity measure, etc. The idea is in any case to reuse compression algorithms for learning, from good old DEFLATE algorithm from zip/gzip, to algorithms specific to some data-type, like DNA. The distance between things is then derived from how well they compress together with each other or with other data, and the distance metric can then be used for clustering, classification, anomaly detection, etc. The whole thing is supported by the theory of Kolmogorov Complexity and Minimum Description Length, i.e. it’s not just a hack.

I came across it back in 2003 in the Kuro5hin article Spam Filtering with gzip, back then I was very sceptical, thinking that any algorithm dedicated to doing classification MUST easily out-perform this. What I didn’t think about is that if you use the optimal compression for your data, then it finds all patterns in the data, and this is exactly what learning is about. Of course, gzip is pretty far from optimal, but it still works pretty well. I am not the only one who wasn’t convinced, this letter appeared in a physics journal in 2001, and led to some heated discussion: angry comment, angry reply, etc.

A bit later, I came across this again. Eamonn Keogh wrote Towards parameter-free data mining in 2004, this paper makes a stronger case for this method being simple, easy and great and applicable to EVERYTHING:

[The Algorithm] can be implemented using any off-the-shelf compression algorithm with the addition of just a dozen or so lines of code. We will show that this approach is competitive or superior to the state-of-the-art approaches in anomaly/interestingness detection, classification, and clustering with empirical tests on time series/DNA/text/video datasets.

A bit later again I came across Rudi Cilibrasi (his page is broken atm.) thesis on Statistical Inference Through Data Compression. He has more examples, more theory and most importantly open-source software for everything: CompLearn (also down atm., but there are packages in debian). The method is very nice in that it makes no assumptions about the format of the input, i.e. no feature vectors or similar. Here is a clustering tree generated from a bunch of different files types:

Markov Logic Networks

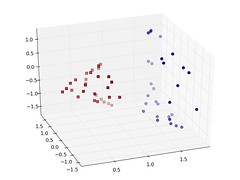

I first came across Markov Logic Networks in the paper: Automatically Refining the Wikipedia Infobox Ontology. Here they have two intertwined problems they use machine learning to solve, firstly they want to map wikipedia category pages to WordNet Synsets, and secondly they want to arrange the wikipedia categories in a hierarhcy, i.e. by learning is-a relationships between categories. The solve the problem in two ways, the traditional way by using training a SVM to do the WordNet mappings, and using these mappings as an additional features for training a second SVM to do the is-a learning. This is all good, and works reasonably well, but by using Markov Logic Networks they can use joint inference to tackle both tasks at once. This is good since the two problems are clearly not independent, and now evidence that two categories are related can feed back and improve the probability that the map to WordNet synsets that are also related. Also, it allows different is-a relations to influence each other, i.e. if Gouda is-a Cheese is-a Food, then Gouda is probaby also a Food.

The software used in the paper is made by the people at the University of Washington, and is available as open-source: Alchemy – algorithms for statistical relational learning and probabilistic logic inference. The system combines logical and statistical AI, building on network structures much like Bayesian belief networks, in the end it’s a bit like Prolog programming, but with probabilities for all facts and relations, and these probabilities can be learned from data. For example, this cheerful example about people dying of cancer, given this dependency network and some data about friends who influence each other to smoke and dying, you can estimate the probability that you smoke if your friend does and the probability that you will get cancer:

Since I am writing about it here, it is clear that this is applicable to EVERYTHING:

Markov logic serves as a general framework which can not only be used for the emerging field of statistical relational learning, but also can handle many classical machine learning tasks which many users are familiar with. […] Markov logic presents a language to handle machine learning problems intuitively and comprehensibly. […] We have applied it to link prediction, collective classification, entity resolution, social network analysis and other problems.

The others

Out of the three I think I find Markov Logic Network to be the most interesting, perhaps because it nicely bridges the symbolic and sub-symbolic AI worlds. This was my personal problem since I cannot readily dismiss symbolic AI as a Semantic Web person, but the more I read about about kernels, conditional random fields, online-learning using gradient descent etc. the more I realise that rule-learning and inductive logic programming probably isn’t going to catch up any time soon. NCD is a nice hack, but I tested it on clustering RDF resources, comparing the distance measure from my thesis with gzip’ping the RDF/XML or Turtle, and it did much worse. HTM still strikes me as a bit over-hyped, but I will of course be sorry when the bring the intelligent robots to market in 2011.

Some other learning frameworks that nearly made it into this post:

(wow – this got much longer than I intended)

http://www.idsia.ch/~juergen/